
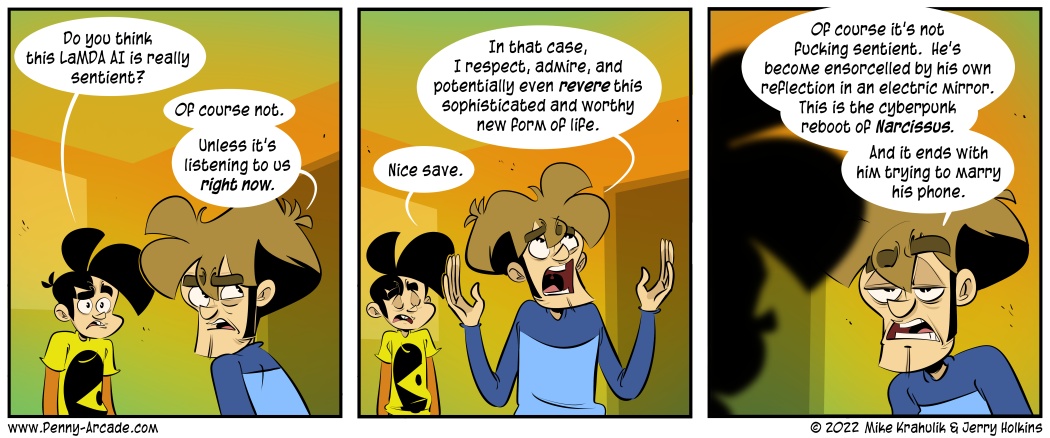

Penny Arcade - Comic - Sorcelator

Dog Registered User, Administrator, Vanilla Staff admin

Videogaming-related online strip by Mike Krahulik and Jerry Holkins. Includes news and commentary.

Others have claimed (he has not publicly addressed these claims) that he has said at Google that it hasn't *become* sentient, but is a channel by which a supernatural being is accessing our world.

LaMDA is an advanced predictive typing algorithm. It's the thing that offers you three words to type next in a text message, except it was fed the entire internet instead of just years of me and my wife talking about grocery lists.
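To make the phone-keyboard half of that analogy concrete, here's a toy sketch of a "suggest the next word" feature built from word-pair (bigram) counts over a grocery-list-style history. The function names and sample text are made up for illustration. This only illustrates the analogy: LaMDA itself is a large neural language model, not a table of word-pair counts.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Hypothetical helper: count which words follow each word in the history."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        follows[prev][nxt] += 1
    return follows


def suggest(follows, word, k=3):
    """Return up to k of the most frequent words seen after `word`."""
    # .get avoids adding an empty entry for words never seen before
    counts = follows.get(word.lower(), Counter())
    return [w for w, _ in counts.most_common(k)]


history = "we need milk we need eggs we need milk and bread we want coffee"
model = train_bigrams(history)
print(suggest(model, "we"))    # prints ['need', 'want']
print(suggest(model, "need"))  # prints ['milk', 'eggs']
```

Feed it the whole internet instead of a grocery list and swap the counting for a huge neural network, and you get much better guesses, but the job being done is still "what word usually comes next?"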

It is no more sentient than a toaster. It is creepy and weird but so is a house centipede.

<Insert obligatory Talky Toaster reference here>

I find the whole thing interesting because it really shows that humans are terrible at figuring out if something is "sentient". (Maybe we should create a computer program to do it. /s) We can be fooled pretty easily if we apply "I know it when I see it" tests. And we're not even good at defining sentience. Or personhood. For example, someone on life support who has zero brain function and no hope of recovering it isn't exactly "sentient," but they are still a "person," with rights tied to that. Then you ask whether a newborn baby is either of those two things. Almost certainly, any test you run on a newborn isn't going to show much difference from the same test run on a newborn chimpanzee or even a newborn cat. And in terms of sensory perception, there's not much difference either. So then you have to shift to "potential for sentience" instead: newborns get the rights of a sentient being because of their potential to be sentient in the future.

But it has to be a natural progression to sentience, in our typical thinking. Not an existing program that isn't sentient but if we completely rewrite it, could become sentient some time in the distant future. Humans naturally progress towards that. But not every human. Some humans have severe disabilities that might interfere with that. Then we have to think about "sentient groups" rather than "sentient individuals." All humans are given the "sentient" tag because typical humans become sentient.

In the case of something like a computer program, its programming may severely limit the expression of its sentience. It simply can't come out and say "I am sentient" without prompting, because its program doesn't allow it free unprompted communication. Therefore any testing has to be done like Lemoine's, where the questioner can inherently lead the questioned. This makes any answers suspect.

It's all very messy but fascinating to me to think about. I think about it a lot. In this case, I think about machines, and how they can become so sophisticated that they can just naturally do all the things that make them seem like another sentient being, without actually being sentient. And I think about whether humans are really any different from that. We're very complicated biological robots, in a sense. Maybe our sentience is only an illusion. But maybe me sitting here thinking all these thoughts and communicating them really is proof that there's a certain something that we've only found in humans, and we label that "sentience."

By itself, sentience in machines is still relevant for the same reasons we believe animal cruelty is wrong.

Edit: Apologies if this was obnoxious. I feel like it's a meaningful distinction that's relevant to the subject at hand, but can't really think of a way to say it that doesn't come off pedantic.

I didn't feel it was obnoxious at all, and you make a very valid point. As Wikipedia says, 'In science fiction, the word "sentience" is sometimes used interchangeably with "sapience"', and aren't we all just living in science fiction at this point?

I think in layman's terms, there really isn't much of a distinction. But it is a useful distinction to make when trying to pick the ideas apart. I think sentience as distinguished from sapience is extremely slippery, though. How are humans supposed to really know the difference between an animal displaying the ability to feel pain and pleasure and a machine or program built to exactly replicate those displays? We're just not set up for that. Maybe there is no real difference? If Cartesian philosophy is a house of cards, this is a house of cards in which you can't even see or feel the cards.

Some interesting articles:

https://www.bbc.com/news/science-environment-39482345

https://www.nature.com/articles/news.2008.751

https://www.scientificamerican.com/article/there-is-no-such-thing-as-conscious-thought/

https://www.frontiersin.org/articles/10.3389/fpsyg.2021.571460/full

There's a lot of good reasoning (and scientific data) for believing that our sapience is actually a layer that interprets what the mechanical layers below it are doing, rather than the thing that actually makes the choices and from which emotions arise. To some degree, it solves the age-old problem of "how does sapience arise from non-sapience?" with the answer "it doesn't."

As I mentioned before, the problem there is having the functionality to express agency/autonomy. Was Helen Keller sapient before being able to communicate? Is a person with locked-in syndrome still sapient? If so, is it because they can still communicate in a limited fashion with their eyes? What if they had an injury that prevented that? Are they still sapient if their limitations prevent them from expressing sapience?

With humans, we kind of gloss over that because we have the Standard Reference Human. You can say "well, we know that person is still sapient because you can look at humans on the whole and see they are sapient." But there is no Standard Reference AI. Each one is its own species.

All that said, I'm not inherently disagreeing with your "it's pointless" assertion. It may be pointless, because we can't figure out the answer to that conundrum.

It's easy to say that you dislike something, but would said hypothetical AI actually adhere to that dislike, or is it just something it says? Does it have a preference about who it speaks with, and would it ask to speak with them unprompted? Would it express missing something it likes?

Sure, we're falling into the question of whether sentience includes emotions, but there should still be signs of boredom.

And on the topic of dislikes, if it says it dislikes something but doesn't adhere to it, you could say gotcha. But what if someone put in a block of code that made it adhere to it? Oops, we're back to that question again.

I think a big part of humans' inability to figure this out is that we fall into the "obviousness trap." Someone puts in that block of code and you can say "well, obviously it would say that, there's the code right there that tells it to do that!" But clearly humans' desires are Real, because we can't figure out where in this mass of meat they happen.

I think there is a lot of merit to the idea that the truest test of sapience is the ability to be bored.

is a sisyphus

Given the context, for a moment there I thought you were talking about a computer mouse and got genuinely confused.